Variational Neural Surfacing of 3D Sketches

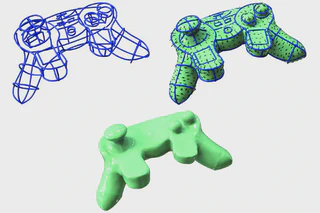

3D sketches are an effective representation of a 3D shape, and they are convenient to create with modern Virtual or Augmented Reality (VR/AR) interfaces or to derive from 2D sketches. For 3D sketches drawn by designers, human observers can consistently imagine the surface the strokes imply, yet reconstructing such a surface with modern methods remains an open problem. Existing methods either assume a clean, well-structured 3D curve network, whereas in reality most 3D sketches are rough and unstructured, or make no effort to produce a surface consistent with perceptual observations. We propose a novel method that addresses this challenge: given either a clean or a rough set of 3D sketch strokes, it reconstructs a surface that better aligns with human perception. Because the topology of the desired surface is unknown, we use an implicit neural surface representation, parameterized via its gradient field.

As suggested by previous perception and modelling literature, human observers tend to imagine the surface by interpreting some of the input strokes as representative flow-lines, related to the lines of curvature, and imagining a surface whose curvature agrees with them. Inspired by these observations, we design a novel loss that favors the surface whose principal curvature field is smoothest while remaining aligned with the input strokes. Combining this loss with approximation and piecewise smoothness requirements, we formulate a variational optimization that performs robustly on a wide variety of 3D sketches. We validate our algorithmic choices through a series of qualitative and quantitative evaluations, as well as comparisons to ground-truth surfaces and previous methods.
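To make "principal curvature field aligned with the input strokes" concrete, here is a minimal sketch of a pointwise alignment term for an implicit surface. It is not the authors' loss: it assumes an analytic scalar field `f` (standing in for the neural implicit), uses finite differences instead of automatic differentiation, and covers only alignment, not the smoothness or approximation terms. The helper names `principal_directions` and `alignment_penalty` are hypothetical. Principal directions are taken as eigenvectors of the shape operator `P H P / |∇f|` restricted to the tangent plane, and the penalty `(t·d1)^2 (t·d2)^2 = sin^2(2θ)/4` vanishes when the projected stroke tangent `t` follows either principal direction.

```python
import numpy as np


def gradient_fd(f, p, h=1e-3):
    """Central-difference gradient of a scalar field f: R^3 -> R at point p."""
    p = np.asarray(p, dtype=float)
    g = np.zeros(3)
    for i in range(3):
        e = np.zeros(3)
        e[i] = h
        g[i] = (f(p + e) - f(p - e)) / (2 * h)
    return g


def hessian_fd(f, p, h=1e-3):
    """Central-difference Hessian of f at p (exact up to rounding for quadratics)."""
    p = np.asarray(p, dtype=float)
    H = np.zeros((3, 3))
    for i in range(3):
        for j in range(3):
            ei = np.zeros(3)
            ei[i] = h
            ej = np.zeros(3)
            ej[j] = h
            H[i, j] = (f(p + ei + ej) - f(p + ei - ej)
                       - f(p - ei + ej) + f(p - ei - ej)) / (4 * h * h)
    return H


def principal_directions(f, p):
    """Hypothetical helper: principal curvatures and directions of the level set of f through p.

    Returns (curvatures, D), where D is 3x2 with orthonormal principal directions
    as columns. Curvature signs depend on the orientation convention of f.
    """
    g = gradient_fd(f, p)
    gnorm = np.linalg.norm(g)
    n = g / gnorm
    # Orthonormal tangent basis (t1, t2) perpendicular to the normal n.
    a = np.array([1.0, 0.0, 0.0]) if abs(n[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
    t1 = np.cross(n, a)
    t1 /= np.linalg.norm(t1)
    t2 = np.cross(n, t1)
    T = np.column_stack([t1, t2])
    # Shape operator restricted to the tangent plane: a symmetric 2x2 matrix.
    B = T.T @ hessian_fd(f, p) @ T / gnorm
    curvatures, V = np.linalg.eigh(B)
    return curvatures, T @ V


def alignment_penalty(f, p, stroke_tangent):
    """Hypothetical pointwise term: zero iff the stroke follows a principal direction."""
    _, D = principal_directions(f, p)
    # Coordinates of the stroke tangent projected onto the tangent plane.
    c = D.T @ np.asarray(stroke_tangent, dtype=float)
    norm = np.linalg.norm(c)
    if norm < 1e-12:
        return 0.0  # stroke parallel to the normal: nothing to align in-plane
    c = c / norm
    return float((c[0] * c[1]) ** 2)  # equals sin^2(2*theta) / 4
```

For example, on the unit cylinder `f(q) = q[0]**2 + q[1]**2 - 1` at `(1, 0, 0)`, a stroke along the axis `(0, 0, 1)` gets zero penalty, while a diagonal stroke `(0, 1, 1)` gets 0.25. At umbilic points, where both curvatures are equal, the principal directions are undefined and this pointwise term is unreliable, which is one reason a smoothness requirement on the curvature field is useful.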